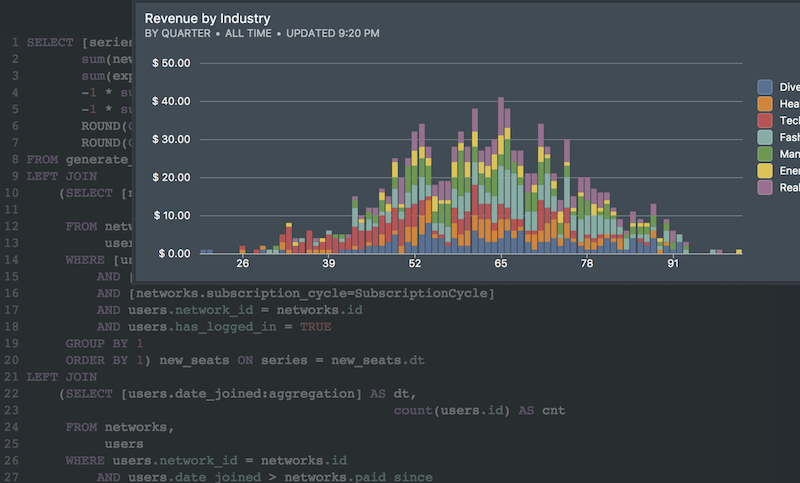

The data enrichment process is traditionally limited to three steps: extract, transform and load (ETL). Extract is the pulling data out of a source system, transform means validating the source data and converting it to the desired format, and load means storing the data at the destination. The pipeline doesn’t end there: a “data enrichment” step is often necessary to significantly improve the value of the integrated data. In this post, we describe four examples of data enrichment practices with links to tools that do them for you.

1. Currency Foreign Exchange

If you’re selling your product in multiple markets, it’s not uncommon to receive sales data in the currency local to that territory. The biggest example of this case is on the Apple Store, where each country has its own store – a store for the US, a store for Canada, etc. At a last count there were 155 different stores!

If you want to get your total sales for accounting purposes, you’ll have to convert each local currency to a common currency. Your options are:

- use a average or closing rate for the time period (weekly, monthly, quarterly, annual etc.)

- look up the exchange rate for that particular time period, if available

- if a direct conversion is not available, triangulate the rate from two other currencies

Historical currency rates are available from Open Exchange Rates, XE, Oanda, Currency Layer amongst other sources.

2. Forward and Reverse Geocoding

Forward geocoding means you’re starting with an human readable address and are seeking coordinates. Reverse geocoding does the opposite – coordinates to addresses.

There are a lot of services out there to do both forward as well as reverse geocoding. We’ve seen good results from Google Maps Geocoding API and Mapbox’s geocoder.

A closely related enrichment feature is IP geocoding, which is finding the location (city, state, country) an ip address maps to. You can use Free GeoIP for this purpose which has generous limits.

You’ll find that while these services have generous quotes for one-off geocoding, bulk and batch calls require a paid subscription.

3. User Agent extraction

Your logs have valuable information about the kinds of devices and browsers that access your service. For example, Safari on the iPad uses the following user agent:

Mozilla/5.0 (iPad; U; CPU OS 3_2_1 like Mac OS X; en-us) AppleWebKit/531.21.10 (KHTML, like Gecko) Mobile/7B405

Unfortunately, the user agent string is not easy to parse without the use of an external library. The good news is that this problem is so common that there are open source libraries out there that do exactly this. Here’s one for Python and one for Javascript.

You’ll want to store the browser, OS and device at the least which would come handy to group your analytics by these dimensions.

4. Campaign and Marketing Attribution

Related to the user agent extraction procedure described above is extracting parameters for marketing attribution. You definitely want to attribute your data to specific marketing campaigns and find out the most successful ones.

UTM parameters is one of the most common ways marketers use to tag specific campaigns. At the least you’ll want to extract campaign source (organic, newsletter, etc.), campaign medium (email, CPC, etc.) and campaign name.

Now, Your turn

If you’ve had to enrich your data in some other way, feel free to share your method in the comments below. Thanks!